As a data scientist working in industry, I frequently witness the impact a machine learning application can make. This impact often has a cascade of downstream effects that a data scientist without enough domain knowledge cannot foresee. Nevertheless, under the tech industry's widespread motto, “move fast and break things,” ML practitioners tend to care little about the size of their products’ impact. In certain cases, they even skip scientifically rigorous evaluation of their products. These tendencies have worried me ever since I started my career in industry. My concern has deepened with the many recent instances of AI applications reproducing human bias on a massive scale and aggravating existing socioeconomic problems.

Recently, I learned about the Tech Policy Workshop at the Center for Applied Data Ethics at USF from Dr. Rachel Thomas on social media. I have long enjoyed her blog posts and talks. She is smart, honest, and concerned about the welfare of people and society, so I assumed that the workshop would be beneficial in many ways. The lineup was also very interesting because the speakers had diverse backgrounds. Additionally, the low registration cost was helpful.

I have been interested in fairness, accountability, and transparency of ML for several years, and have attended a number of events on these topics. Compared with those events, I found this workshop unique in two ways. First, the organizers made a great effort to gather experts from a wide variety of domains ranging from computer science to policymaking. This makes sense because tech ethics and policy are multidisciplinary issues. Second, because the organizers chose a workshop format rather than a conference, the event was more interactive. There were several hands-on exercises, which encouraged valuable discussions among the attendees. The workshop exceeded my expectations and I felt grateful for the opportunity to attend.

Interactive ethics exercise on facial recognition

Every session was interesting and unique in its own way. However, the main lesson I got out of the workshop came from the ethics session led by Irina Raicu from Santa Clara University. Ethics can be vague, abstract, and even esoteric, but Irina made it sound accessible. She compared it to birdwatching: the more we learn about ethics, the more easily we can notice ethical issues in the world. Instead of giving a lengthy philosophy lecture, she taught us various easy-to-understand ethical “lenses”. She then asked us to use these in an exercise based on a real-world case about face recognition. The case was about a dataset [1] that ML researchers at IBM created to train a fairer face recognition model.

I was already familiar with the case. Joy Buolamwini, an ML researcher at MIT, published a study [2] that revealed gender and racial bias in the training datasets of many commercial face recognition platforms, including IBM’s. After this paper was published, IBM was forthcoming about the issue and promised to improve its application. It is safe to assume that IBM researchers had good intentions when they published a new training dataset. Unfortunately, we found that this new dataset still had many problems, especially related to data privacy.

During the exercise, we applied different ethical lenses to identify which ethical values were pursued or violated. Since we were a group of data scientists, policymakers, and activists, there were a variety of ideas. I admit that at the beginning of the exercise, I was somewhat frustrated because ethics cannot be easily optimized. This meant that we might not be able to reach conclusions easily. However, once we started the discussion, I realized that the true value of the exercise was not about finding the right answer quickly, but about evaluating various perspectives, especially when multiple ethical values were in conflict. The exercise also taught me that ethical decision making is a highly dynamic process that requires a diverse set of opinions. I started thinking that this type of exercise could help engineers change the way they think.

Ethics training for tech workers

Granted, as Chris Riley at Mozilla pointed out during his session, teaching ethics to engineers may not be the best way to solve the tech-related problems in our society. After all, ethics focuses on individuals. Typically, socioeconomic problems are solved more effectively through legislation, regulations, and policies. However, several speakers hinted that training engineers to learn about ethical decision making can still be useful.

The tech industry has great power and influence over our society

As Prof. Elizabeth E. Joh from UC Davis mentioned, the tech industry has a significant amount of power these days. According to her, in certain circumstances, police must even drop an investigation so as not to violate the non-disclosure agreements tied to the procurement of the technological devices they use. She gave the example of police body cameras produced by Axon, a company that dominates the market. Axon makes almost every decision on how the device works, how and where data is stored and maintained, and so on. This means that management decisions at a tech company can have a significant impact on the general public. Guillaume Chaslot from Algo Transparency delivered a similar message using an example from YouTube. It showed how slow and passive YouTube’s response was on content moderation. This is similar to Facebook’s naïve approach that contributed to the dissemination of disinformation over the internet, which created numerous sociopolitical problems all over the world.

Tech workers also have power

Multiple speakers emphasized that tech workers have great power as well. Recent tech employee walkouts demonstrate that power. Some even affected their companies’ decisions on certain social issues. The news [3] about Google recently hiring a firm known for anti-union efforts implies that companies now recognize employee unrest as a threat. ML practitioners who work closely with sensitive datasets can wield even greater power. Kristian Lum from the Human Rights Data Analysis Group shared a disturbing example of an ML application used at a government branch. An ML practitioner manipulated the results by hand-selecting model coefficients from multiple versions of a dataset, and this only came to light much later during an audit. Based on my conversations with other data scientists, proper oversight or formalized review of technical work is still missing in many industries. Under these circumstances, the responsibility to provide transparency and accountability falls to individual ML practitioners. Since building an ML application is a complex process, technical debt can accumulate quickly.

What I can do as a tech worker

The fact that ethics is about an individual’s decisions makes me think that there should be something I can do and that I should be aware of the responsibility that comes with my power as a tech worker. I can share a few things I try at work to make a change, even though they are small. First, I seek out resources on industry best practices for transparency and accountability in ML and try to establish them at work. My team uses Model Cards [4], which summarize how models are trained and how they are supposed to be used, and Datasheets [5], which describe how data are collected, used, and processed. Second, to prevent technical debt, I often ask for reviews so that my work is seen by many different stakeholders. Another effort is bringing my work to a public space through publications, seminars, or conferences. For tech ethics and policy issues, I try to take advantage of any opportunities inside my job (e.g., lunch-and-learn sessions) or outside (e.g., house parties or friends’ gatherings) to raise awareness. Finally, I try to learn more about the topics via events like this workshop.

What I can do as a citizen

I spent a lot of time thinking about what I can do as a tech worker, but I was forgetting something more important. At the end of the workshop, Prof. Elizabeth E. Joh talked about how tech companies wield their massive power in surveillance and policing. An attendee asked her whether there is anything we can do to make a change. Rather than answering his question directly, she asked him how many times he had attended his own city’s council meetings. She said the general public is still not aware enough of this problem, and there is not enough momentum to create strong public opinion. That is why we need to raise our voices to address the issue and demand a change. Carrying out my duty as a citizen by speaking up was what I had overlooked.

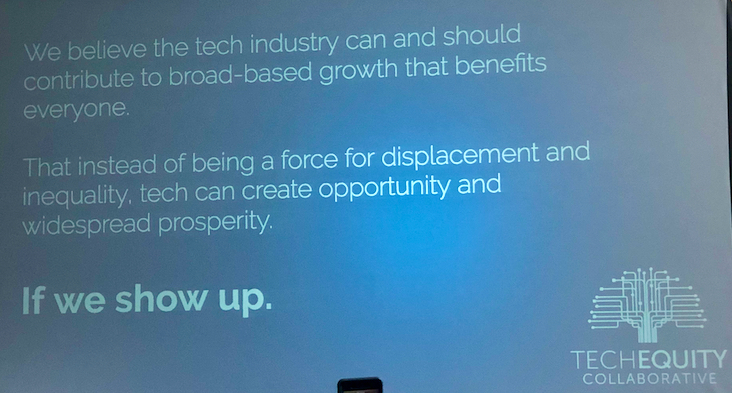

At the workshop, I heard from Bay Area government officials about how they try to protect their citizens. Catherine Bracy from TechEquity Collaborative discussed organizations that help protect local communities from tech-related problems. Even though the Bay Area has been facing many problems with housing and severe income inequality due to the tech industry, it has also become the place where movements to fight back are pioneered. I think it has happened here because 1) it is the epicenter of the tech boom, 2) the severity of the problems is extreme, and 3) people are more aware of and sensitive to these issues.

Other local governments face a very different situation. The Litigating Algorithms report [6] by the AI Now Institute mentions that many local governments are drawn to the idea of implementing automated decision processes (ADP) because officials expect ADP to save money. Since these governments do not have affordable access to the right talent, they end up outsourcing the work to cheap vendors, who often execute it poorly and do not provide enough transparency. The report lists many examples of ADP that went wrong and harmed many people.

Responsibility as a citizen and a tech worker

This workshop gave me an idea of how to combine my duty as a citizen and my duty as a tech worker. Government officials I spoke to at the workshop said it is extremely difficult for governments to catch up with the tech industry. It makes sense: policymaking is an inherently slow process, but tech is all about fast developments and adaptations. To reduce the gap, we can start organizing small groups of tech workers to help nonprofits and local governments navigate the ever-changing tech space more efficiently. In the long run, these groups can form an advisory board or a civilian oversight committee to monitor tech-related issues in local communities, such as predictive policing. By then, of course, these groups will include not only tech workers but also other stakeholders such as local residents, legal experts, social scientists, activists, and more. This way, tech workers like myself can provide our local communities with technical expertise. At the same time, I will have a better idea of the real impact that my work makes. I optimistically believe that as we tech workers engage with our communities, we will change tech culture positively.

Final thoughts

In addition to learning much about tech ethics and policy, I found the workshop particularly special because I met great people from a variety of backgrounds, and I made new friends. I am sure many attendees had the same experience. It’s a rare occasion to have a diverse group of people sharing many concerns about the future in the same place for two days of honest discussions.

When I saw ads for the event, I hesitated over whether to register. I was unsure whether I would belong there because policy is outside of my experience. During the workshop, I confessed this to other attendees. Some said they had similar hesitations. After listening to my confession, Shirley Bekins, a housing activist who sat beside me, said with a big smile, “Of course you should be here!”

References

[1] M. Merler, N. Ratha, R. S. Feris, and J. R. Smith, “Diversity in Faces,” arXiv:1901.10436 [cs], Apr. 2019.

[2] J. Buolamwini and T. Gebru, “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification,” p. 15.

[3] N. Scheiber and D. Wakabayashi, “Google Hires Firm Known for Anti-Union Efforts,” New York Times, 20-Nov-2019.

[4] M. Mitchell et al., “Model Cards for Model Reporting,” Proc. Conf. Fairness Account. Transpar. (FAT* ’19), pp. 220–229, 2019.

[5] T. Gebru et al., “Datasheets for Datasets,” arXiv:1803.09010 [cs], Apr. 2019.

[6] R. Richardson, J. M. Schultz, and V. M. Southerland, “Litigating Algorithms 2019 US Report,” AI Now Institute, p. 32.