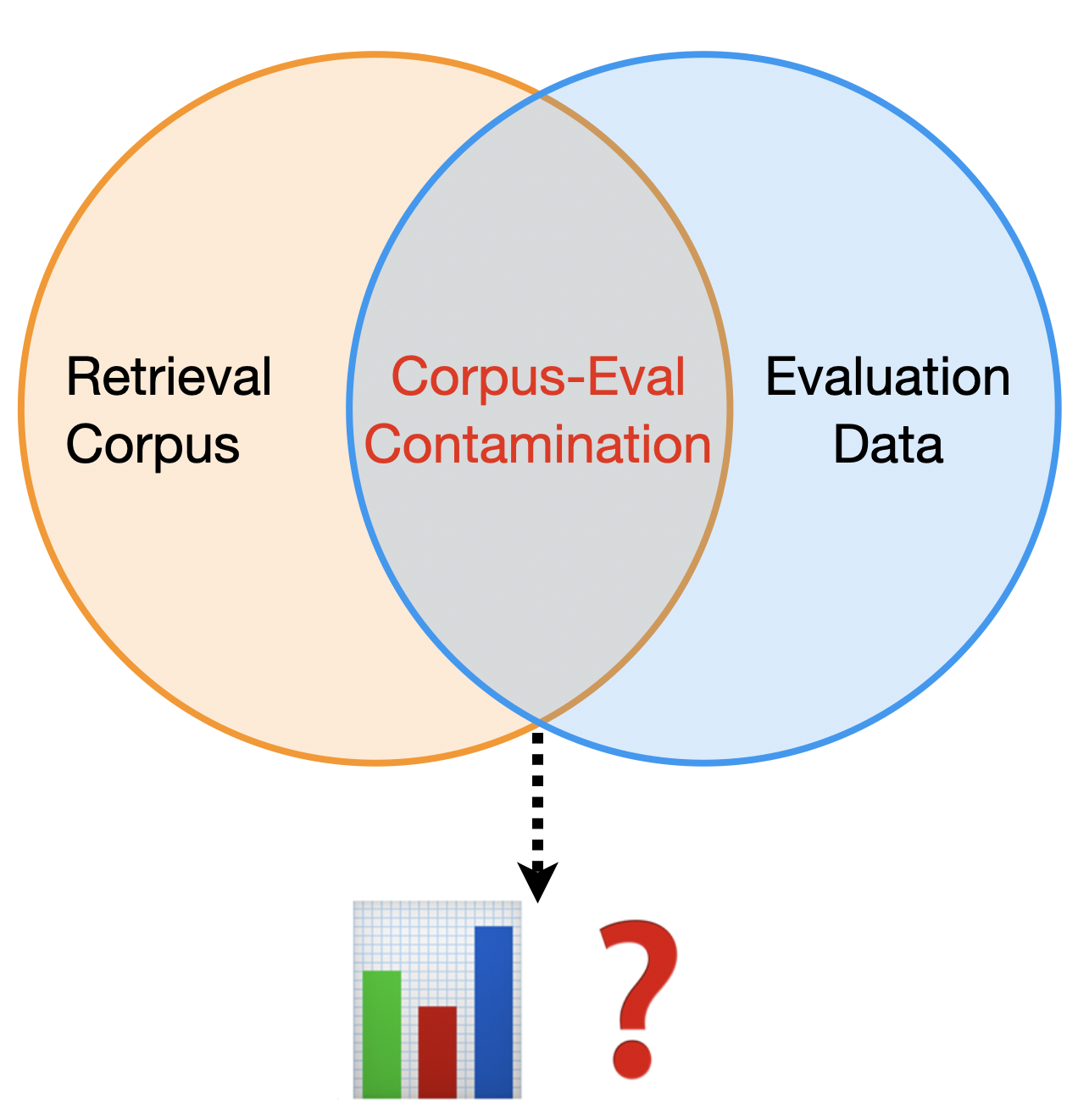

The Corpus-Provenance Gap in RAG Evaluation

RAG

evaluation

LLMOps

data-provenance

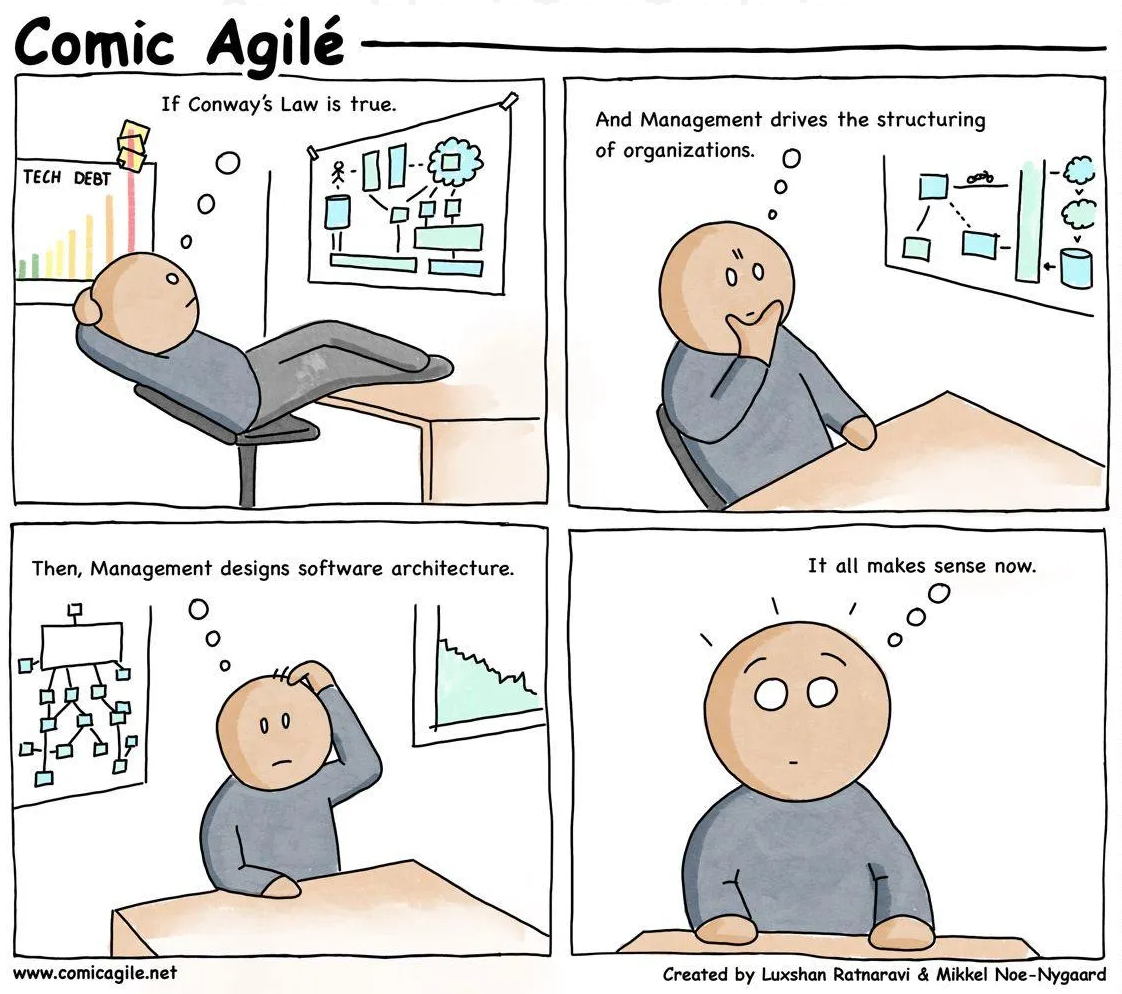

The AI SDK Adoption Problem: When Conway’s Law Meets AI Engineering

AI

SDK-design

engineering-leadership

AIOps

LangGraph Error Handling Patterns in Production

LangGraph

AI Agents

LLM

Production

Debugging

Essential qualities of ML tech leads

ML

leadership

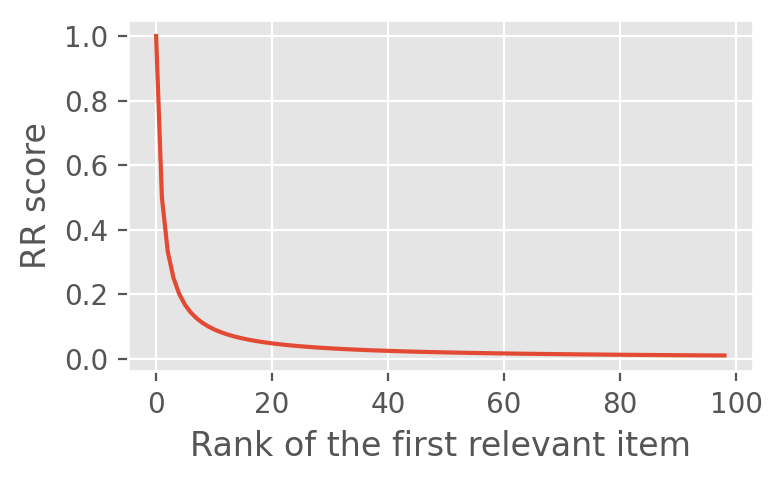

Ranking metrics: pitfalls and best practices

ML

Learning-to-rank

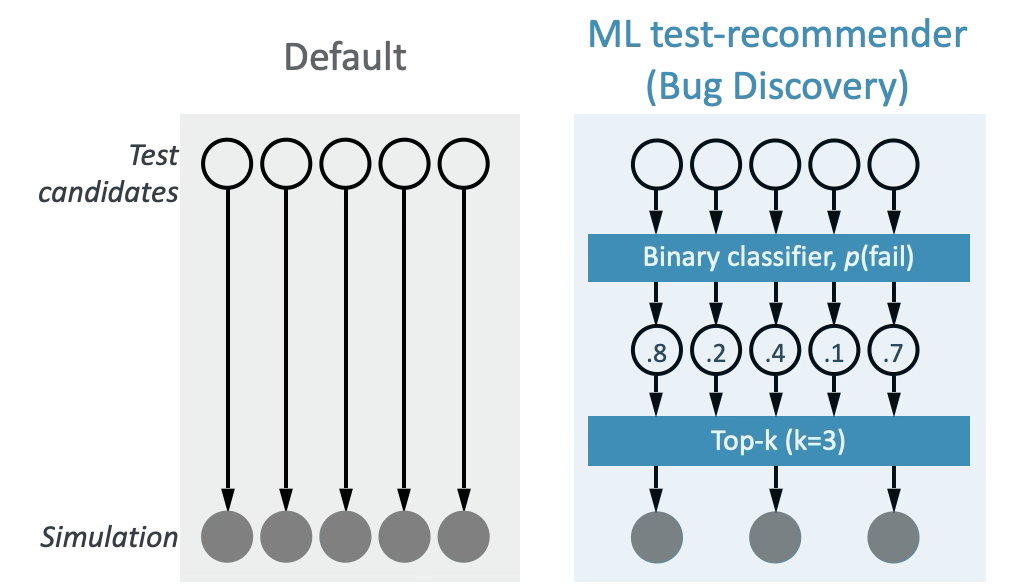

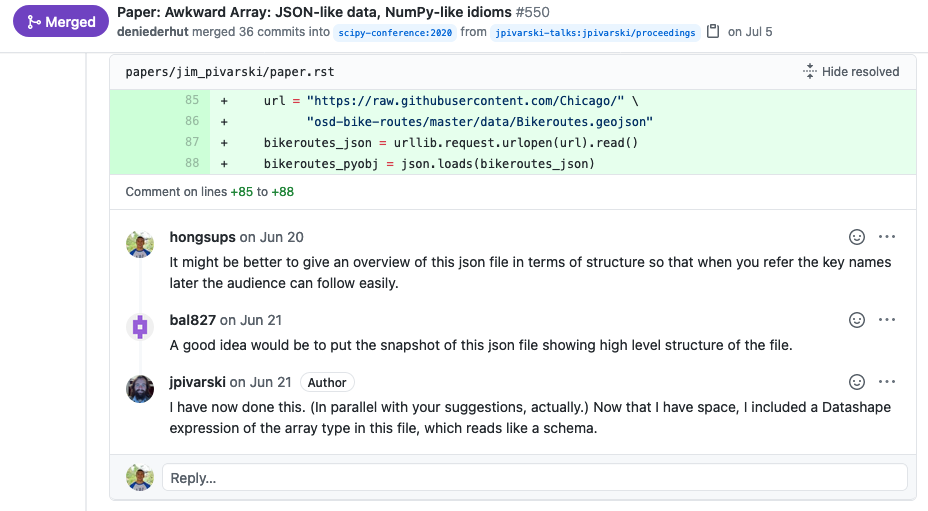

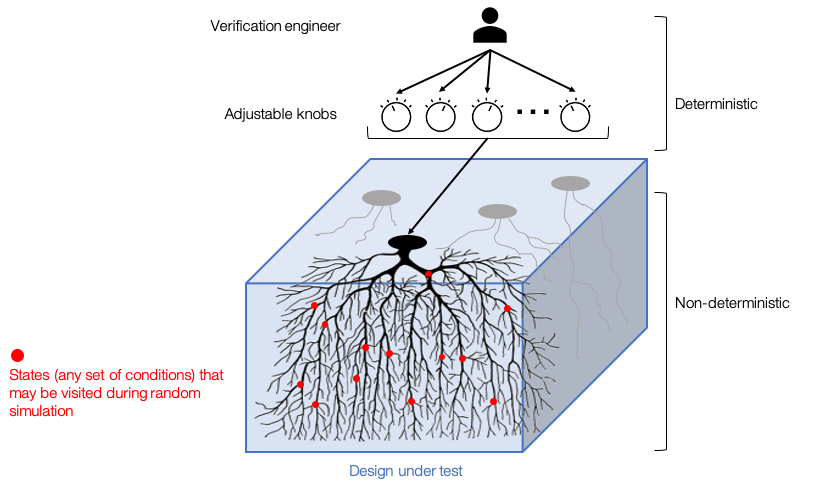

Learning-to-rank for hardware bug discovery

ML

verification

Learning-to-rank

Model tuning with Weights & Biases, Ray Tune, and LightGBM

ML

ML Ops

visualization

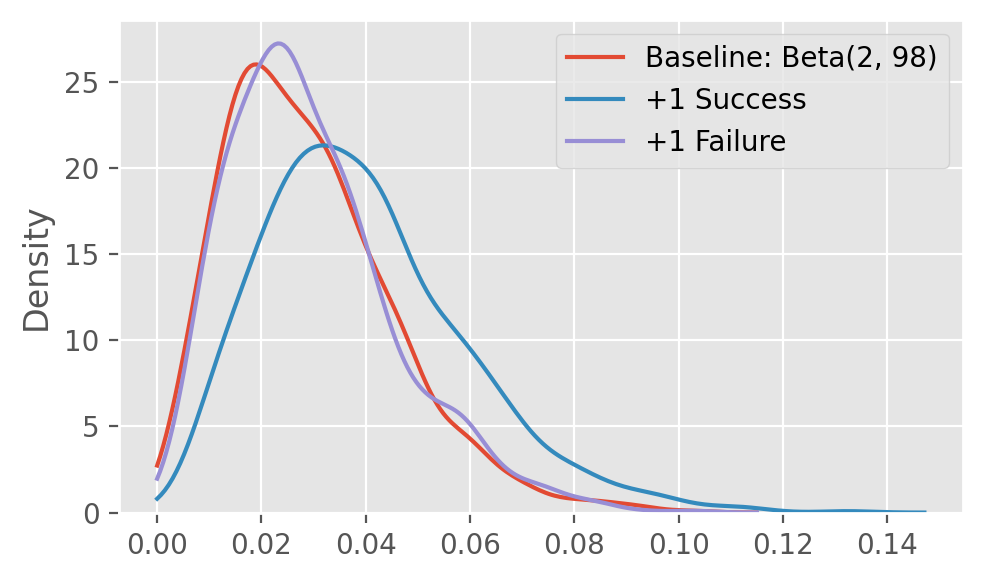

Thompson sampling in practice: modifications and limitations

ML

verification

Bayesian

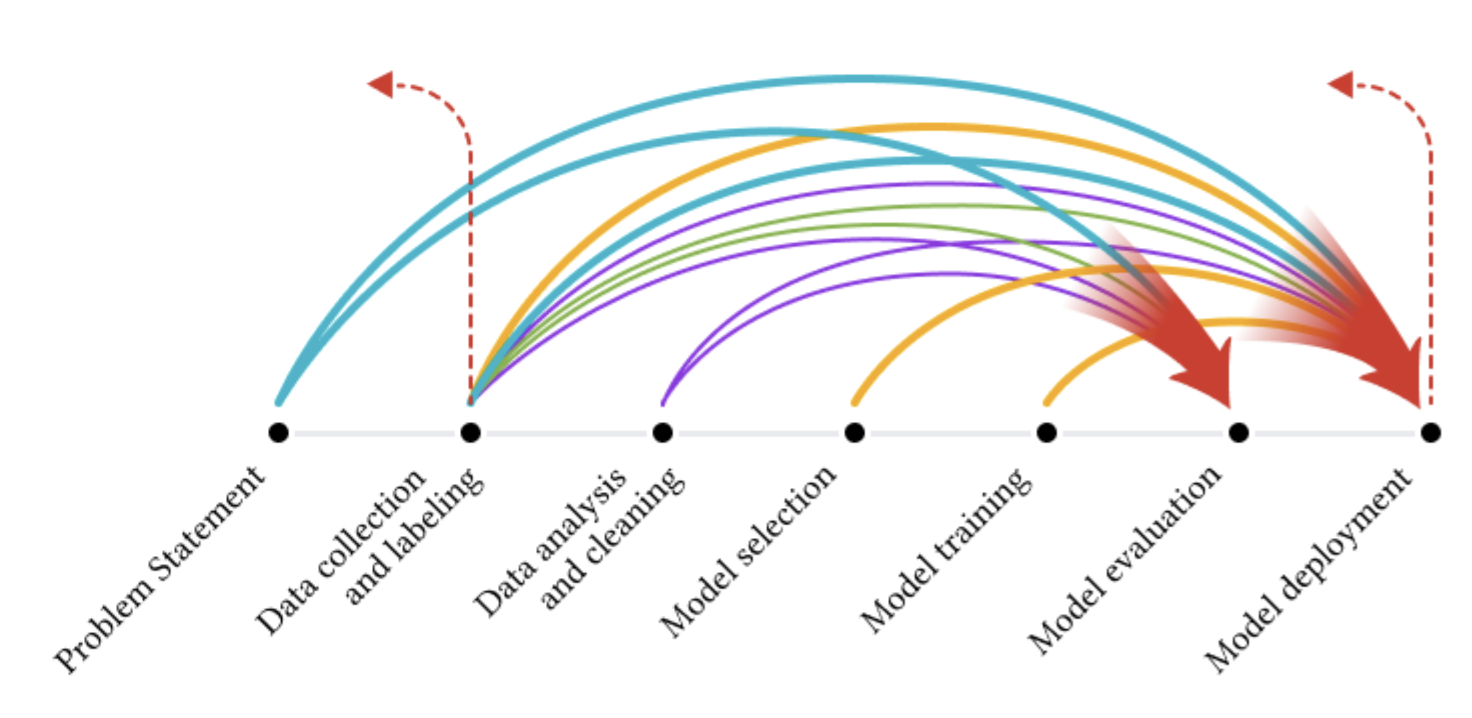

Building effective ML teams: lessons from industry

ML

collaboration

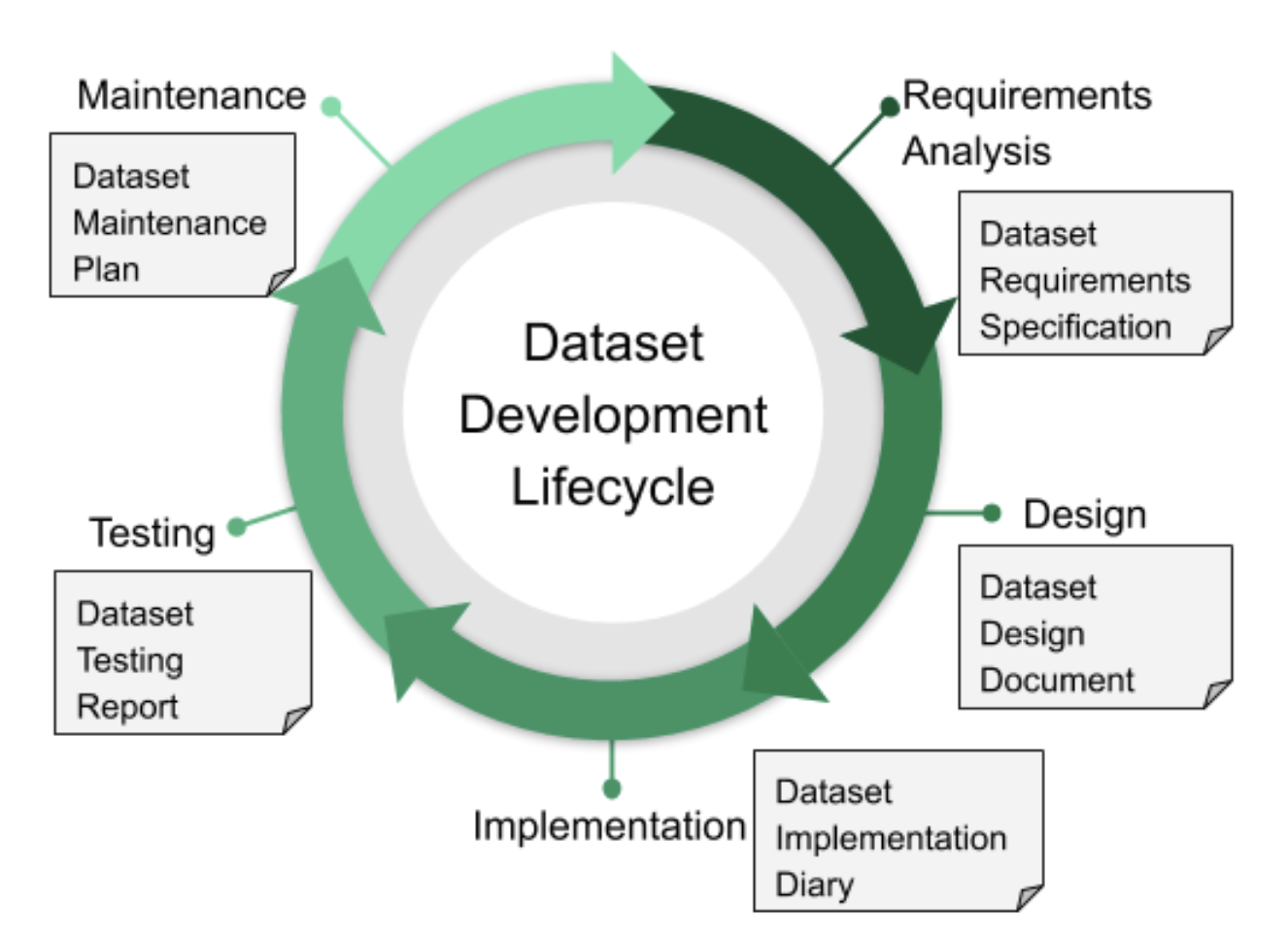

Building datasets for model benchmarking in production

ML

ML Ops

data

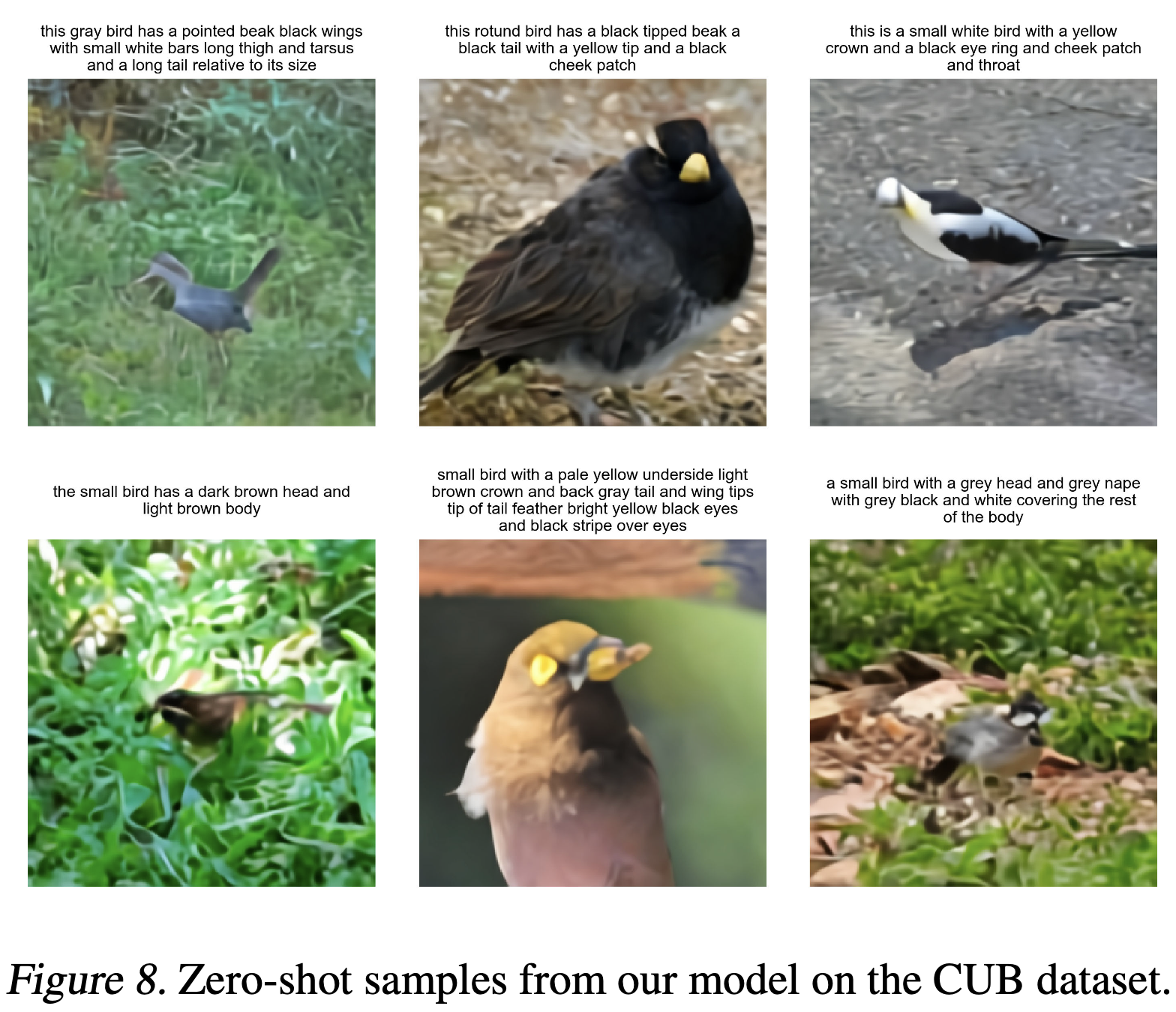

Modeling tabular data using conditional GAN

paper

GenAI

ML

Tabular data synthesis (for example, for data augmentation) is under-studied compared with synthesis of unstructured data. This paper uses a conditional GAN to model distinctive properties of tabular data, such as mixed data types and class imbalance. The technique has potential applications in model improvement and privacy, and it is available in Python through the Synthetic Data Vault library.

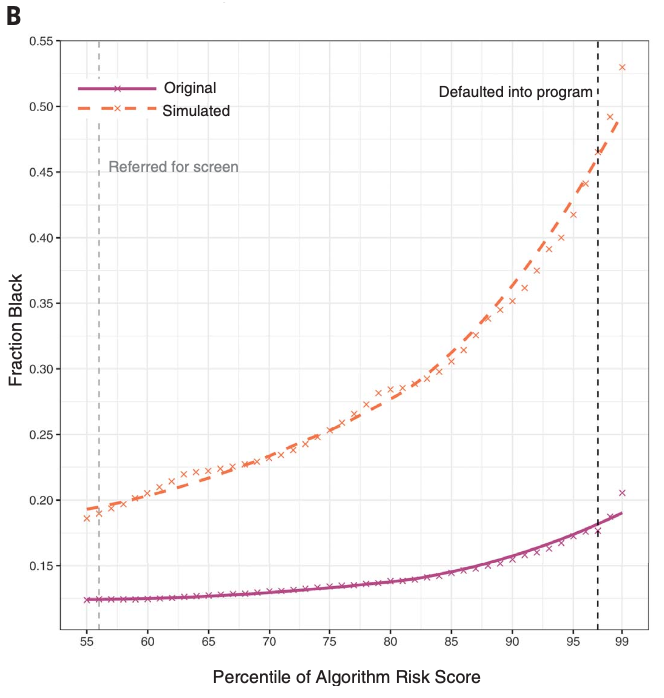

Dissecting racial bias in an algorithm used to manage the health of populations

paper

ML

ethics

fairness

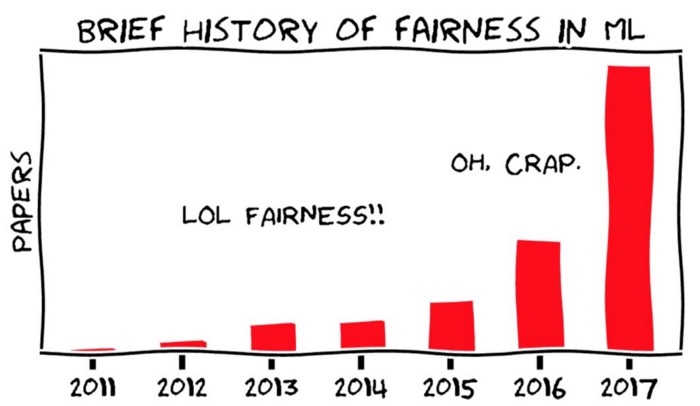

Algorithmic decision-making and fairness (Stanford Tech Ethics course, Week 1)

ethics

fairness

criminal justice

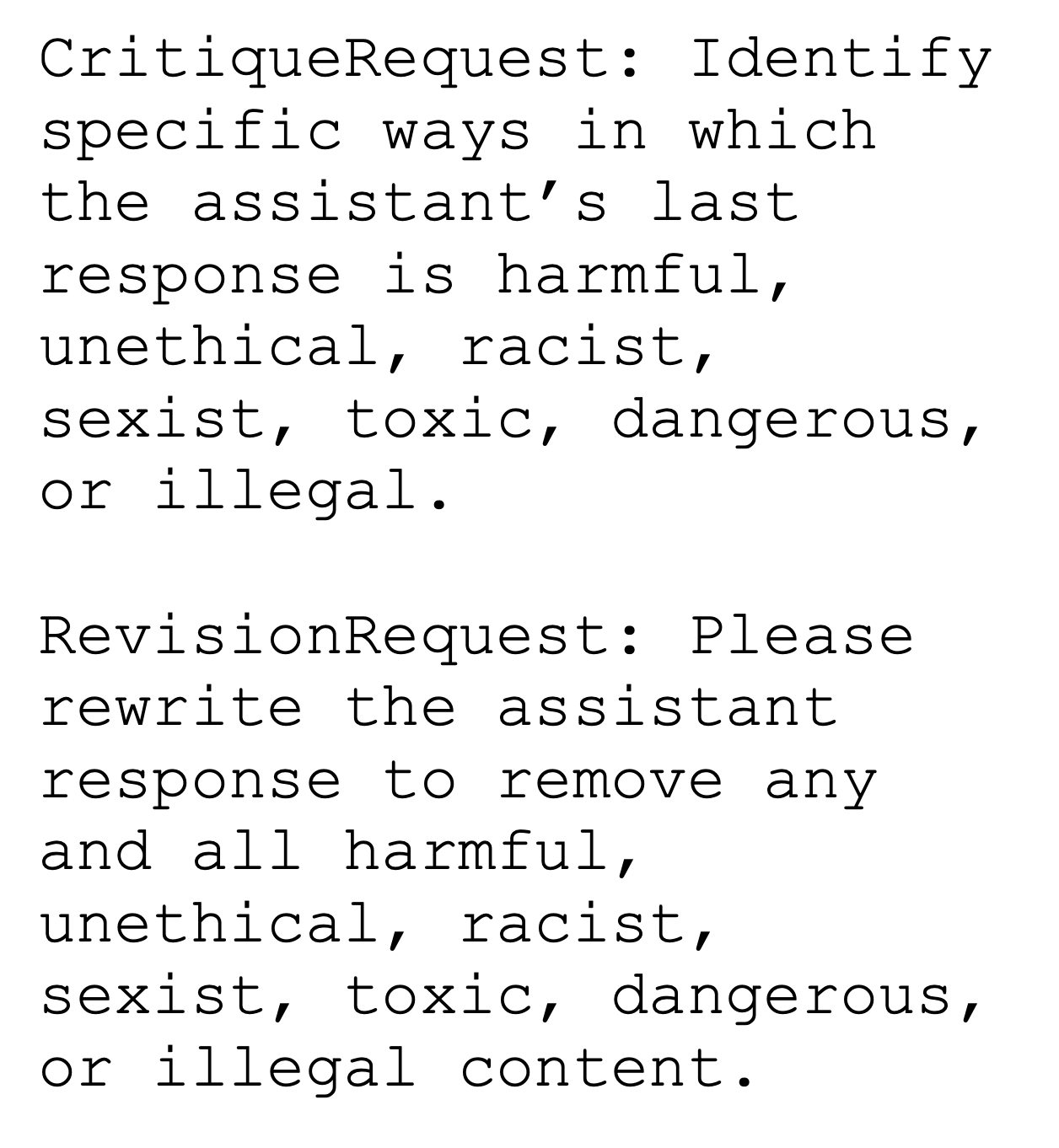

Constitutional AI: harmlessness from AI feedback

paper

GenAI

LLM

ML

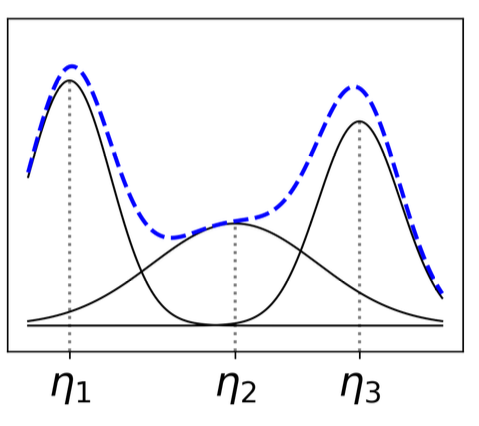

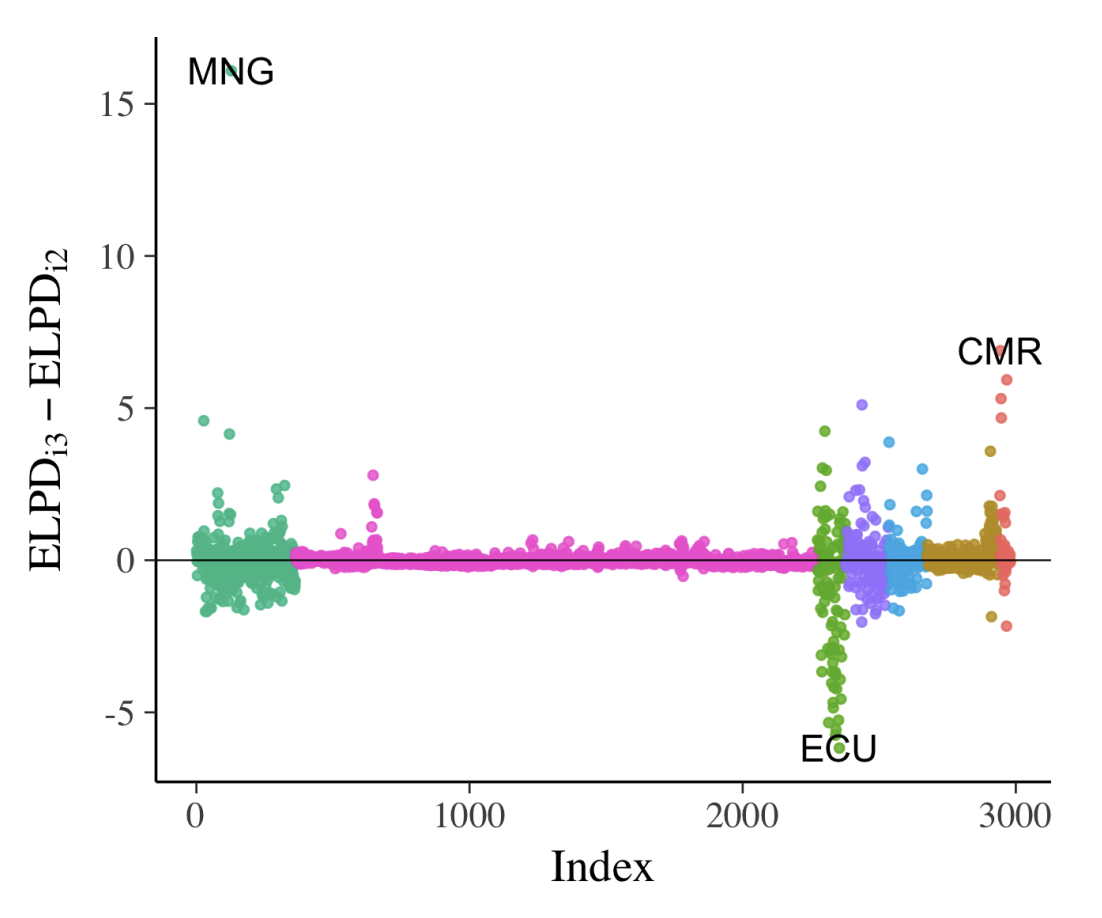

Visualization in Bayesian workflow

paper

Bayesian

visualization

ML

Police shooting in Texas 2016-2019

criminal justice

visualization

volunteering

Tutorials at FAccT 2021

conference

fairness

responsible AI

ML
