Imagine designing a highly complex machine. To be certain that it functions as its design specifies and does not have any bugs, you would need to test every aspect of the design exhaustively. If the machine is controlled by a set of knobs that can be turned on and off, the cost of this verification grows exponentially with the number of knobs. For instance, a machine with 100 binary on-off knobs needs 2^100 tests to cover all possible combinations. If we assume that a single test takes one second to run, this equates to roughly 10^22 years of testing. For present-day microprocessors, it is even more challenging. There can be thousands or tens of thousands of two-state flip-flops in a single microprocessor. Therefore, it is impossible to verify microprocessor designs exhaustively.
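The arithmetic behind this estimate is easy to check. The short sketch below assumes, as in the example above, binary knobs and one second per test; the function name `exhaustive_test_years` is made up for illustration.

```python
# Assumption: an average year of 365.25 days.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def exhaustive_test_years(num_knobs: int, seconds_per_test: float = 1.0) -> float:
    """Years needed to run every on/off combination of `num_knobs` binary knobs."""
    num_tests = 2 ** num_knobs  # each knob doubles the number of combinations
    return num_tests * seconds_per_test / SECONDS_PER_YEAR


years_for_100_knobs = exhaustive_test_years(100)
print(f"{2 ** 100:.3e} tests, about {years_for_100_knobs:.1e} years")
```

The result lands on the order of 10^22 years, and every additional knob doubles it.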

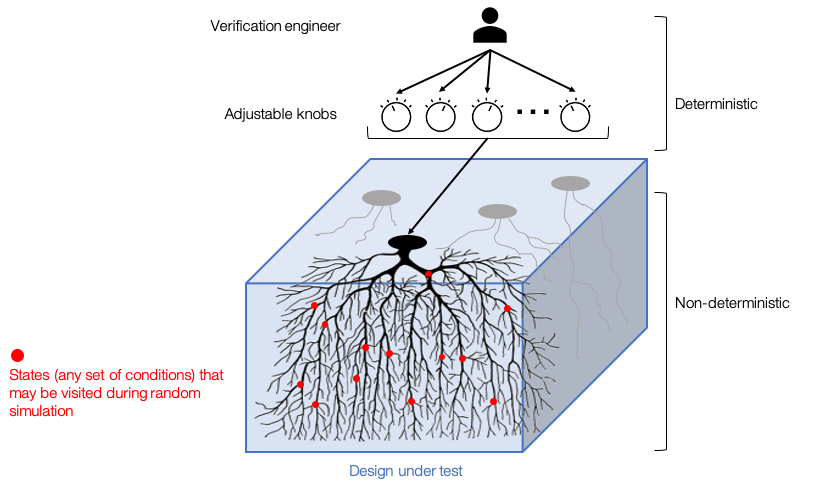

To work around this problem, hardware verification engineers use a method called random-constraint testing. This is more sophisticated than simple random adjustment of knobs: it is a hybrid of manual control and randomization. Engineers can direct the test behavior to a degree by setting constraints for the tests using adjustable knobs. Once the constraints are set, the rest of the verification process depends on randomization. The knobs start subsequent stochastic processes, which stimulate various parts of the design. This way, engineers can explore the design randomly under the constraints they have set.

This method works well when engineers have not explored the design much. As engineers start exploring a design, they find and fix bugs, and the more they explore, the fewer bugs are left to be fixed. Eventually, it becomes very difficult to detect these rare bugs by random probing. Most of the hardware verification effort goes into finding the few remaining bugs in the design. In fact, this process is so time-consuming that 60-70% of the compute time spent on hardware development goes into verification.

If random-constraint testing is not good at specifically targeting bugs in the design, what are the alternatives? My team in Arm Research has been working on this problem since last year. We have analyzed our CPU verification data and successfully trained machine learning (ML) models to solve this problem. We deployed an ML application with these models in collaboration with production engineers at Arm, Mark Koob and Swati Ramachandran. Our application uses ML to flag tests that are likely to find bugs. Verification engineers can feed a large group of prospective tests to our application, and the application returns the subset that is likely to find bugs. This way, engineers can focus on these tests only and reduce the number of tests to run, which in turn saves compute costs.

Currently, our application is being used consistently by Arm verification engineers as a complementary tool to their existing workflow. On average, it has been shown to be 25% more efficient than the default verification workflow in terms of finding bugs, and 1.33x more efficient at finding unique bugs. Through our application, engineers were able to find more unique bugs per test run when compared to their existing verification workflow.

Filtering prospective tests using ML

Now let us take a deeper look at how our application works. The main challenge of using ML for hardware verification is that everything revolves around random-constraint testing. Not only is the data collected by this method, but also the testing infrastructure is built to optimize this process. The problem is that random-constraint testing consists of two very different parts:

- Deterministic knob control by verification engineers; and

- Non-deterministic subsequent processes that are random and intractable.

This means two things. Firstly, the only source of usable data is the knobs. Secondly, we cannot guide tests directly to explore new design space because the whole process is non-deterministic.

Let’s first look at the knob data. Here, each sample is a test that was run in the past. It has several hundred knob values (input) and a binary output: bug or bug-free. In our data, bugs were extremely rare (less than 1%). To address this severe class imbalance, we adopt two approaches. Firstly, we train a supervised learning model that computes the probability of finding a bug based on a set of knob values. This model detects tests that may expose bugs similar to the previous ones. Secondly, we train an unsupervised learning model that estimates the similarity between a new test and the previous tests. If the similarity is low, the test is likely to be novel. Novel knob combinations can probe unexplored design areas and are more likely to expose bugs. In our preliminary results, we found that these models can detect different types of bugs. Since our main goal is to capture as many bugs as possible, we flag a test as likely to find a bug if either of the two models predicts that it will.
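The "either model" rule can be illustrated with a small sketch. This is not our production code: the similarity measure (fraction of matching knob values), the thresholds, and the stand-in `toy_bug_probability` function are all assumptions chosen to keep the example self-contained.

```python
from typing import Callable, Sequence

Knobs = Sequence[int]  # one test = one value per knob (binary here for simplicity)


def similarity(a: Knobs, b: Knobs) -> float:
    """Fraction of knob positions on which two tests agree (assumed measure)."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def novelty_score(candidate: Knobs, history: Sequence[Knobs]) -> float:
    """One minus the similarity to the closest previously run test."""
    return 1.0 - max(similarity(candidate, past) for past in history)


def flag_test(
    candidate: Knobs,
    history: Sequence[Knobs],
    bug_probability: Callable[[Knobs], float],
    prob_threshold: float = 0.5,     # hypothetical threshold
    novelty_threshold: float = 0.5,  # hypothetical threshold
) -> bool:
    """Flag the test if either model considers it promising."""
    looks_like_past_bug = bug_probability(candidate) >= prob_threshold
    looks_novel = novelty_score(candidate, history) >= novelty_threshold
    return looks_like_past_bug or looks_novel


# Toy data: two past tests and a stand-in for a trained supervised model.
history = [(0, 0, 0, 0), (1, 1, 0, 0)]


def toy_bug_probability(knobs: Knobs) -> float:
    return 0.9 if knobs[0] == 1 and knobs[1] == 1 else 0.05


flag_similar_to_bug = flag_test((1, 1, 0, 1), history, toy_bug_probability)
flag_novel = flag_test((0, 1, 1, 1), history, toy_bug_probability)
flag_neither = flag_test((0, 0, 0, 1), history, toy_bug_probability)
```

Combining the two predictions with a logical OR favors catching more bugs at the cost of running more tests, which matches the goal stated above.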

To avoid the difficulty in guiding test behavior, we choose a filtering approach. We leave the knob values to be generated randomly from the testing infrastructure and filter them afterwards based on ML prediction scores. To do so, we need to provide the ML models with a large group of knob values (test candidates) first. Luckily, this process is computationally cheap. Then, the ML models compute prediction scores (the probability of having a bug) of the candidates. Based on the scores, we select a subset of the candidates that are more likely to find bugs than others.
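As a rough sketch of this generate-then-filter idea, the code below draws random knob settings, scores them, and keeps the highest-scoring ones. The random generator stands in for the testing infrastructure, `toy_score` stands in for a trained model, and the budget of 50 tests is arbitrary; all three are assumptions for illustration.

```python
import random
from typing import Callable, List, Tuple

Knobs = Tuple[int, ...]


def generate_candidates(num_candidates: int, num_knobs: int, rng: random.Random) -> List[Knobs]:
    """Stand-in for the testing infrastructure: random binary knob settings."""
    return [
        tuple(rng.randint(0, 1) for _ in range(num_knobs))
        for _ in range(num_candidates)
    ]


def filter_candidates(
    candidates: List[Knobs],
    score_fn: Callable[[Knobs], float],
    budget: int,
) -> List[Knobs]:
    """Keep the `budget` candidates with the highest prediction scores."""
    ranked = sorted(candidates, key=score_fn, reverse=True)
    return ranked[:budget]


def toy_score(knobs: Knobs) -> float:
    """Hypothetical scoring function; a real one would be a trained model."""
    return sum(knobs) / len(knobs)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
candidates = generate_candidates(num_candidates=1000, num_knobs=8, rng=rng)
selected = filter_candidates(candidates, toy_score, budget=50)
print(f"running {len(selected)} of {len(candidates)} candidate tests")
```

Only the selected tests are simulated, so the cost of scoring many candidates is small compared with the compute saved by not running the rest.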

Deploying ML within existing random-constraint testing infrastructure

Now that we have trained ML models ready, can we completely replace our existing random-constraint testing flow with our application? The answer is no. The filtering approach, even with the unsupervised learning model, does not completely solve the exploration problem. That is why the existing flow (random-constraint testing) should remain. Random probing can still be useful for exploration to a degree, and it provides new training data for model updates. Thus, we propose two parallel pathways: one with the default randomized testing, and the other with the ML models, where an additional set of test candidates is provided and only the tests flagged by the models are run. This way, it is possible to continue collecting novel data from the default flow for exploration while exploiting the data from previous tests via the ML application.

Some may think that our job is done when the models are delivered and deployment finally happens. This is not true. For the models, a new journey begins when they are deployed in the production environment, because unexpected events are likely waiting for them. For instance, we have learned that the design and the testing infrastructure go through daily changes, so test behavior and the data generation process may change frequently. This means that the models deployed at the beginning are not guaranteed to keep performing well as time goes by.

To address this, we have conducted research into the optimization of model retraining. We identified how often models need to be retrained and how much data should be used for training. Using this information, we’ve built a retraining module that is automatically invoked periodically or upon verification engineers’ request. During the retraining process, we compare a variety of different models and tune their hyperparameters. This allows for flexibility across changes in data generation processes and various microprocessor designs.
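The two ideas in this paragraph, triggering retraining on a schedule or on request and then picking the best of several candidate models, can be sketched as follows. The seven-day interval, the model names, and the validation scores are hypothetical placeholders, not values from our deployment.

```python
from datetime import datetime, timedelta
from typing import Callable, Dict


def should_retrain(
    last_trained: datetime,
    now: datetime,
    interval: timedelta,
    requested: bool = False,
) -> bool:
    """Retrain when an engineer asks for it or when the interval has elapsed."""
    return requested or (now - last_trained) >= interval


def select_best_model(candidates: Dict[str, Callable[[], float]]) -> str:
    """Evaluate every candidate and return the name with the best validation score."""
    scores = {name: evaluate() for name, evaluate in candidates.items()}
    return max(scores, key=scores.get)


last_trained = datetime(2020, 1, 1)
interval = timedelta(days=7)  # hypothetical retraining period

too_early = should_retrain(last_trained, datetime(2020, 1, 5), interval)
on_request = should_retrain(last_trained, datetime(2020, 1, 5), interval, requested=True)
overdue = should_retrain(last_trained, datetime(2020, 1, 9), interval)

# Each callable stands in for "train this model and score it on validation data".
best_model = select_best_model({
    "model_a": lambda: 0.61,
    "model_b": lambda: 0.74,
})
```

In practice, each evaluation callable would also cover hyperparameter tuning, so the comparison is between tuned models rather than defaults.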

Towards end-to-end ML

When we talk about ML, the focus is often on algorithms. As I mentioned, however, when it comes to building ML products, the algorithms are only a small part of a much larger pipeline, especially when scalable deployment is taken into consideration. From data acquisition all the way to deployment and assessment, every step requires the attention of data scientists and ML researchers. This is because developing ML products is different from typical software development. Many processes are interconnected and data-dependent, which makes them more challenging to test and evaluate.

Admittedly, we initially approached the development of this ML application as a “throw it over the wall” type of engagement. After developing the core ML modules (data preprocessing and models), we delivered them to production engineers without much engagement afterwards. After the application was deployed in several projects, we occasionally needed to intervene to deal with unexpected model behavior. We soon realized that not engaging in the deployment process makes it very difficult to solve any issues that happen after model deployment.

Recently, our colleagues have made it easier for us to contribute directly to the application. They have developed a Python package for data science and ML, which has become a core part of our ML application. This allowed us to contribute to the ML application directly and easily, and it also enabled scalable Python on Arm’s internal compute platform. Our new team member, Kathrine (Kate) Behrman, has already built a new module to fix an existing problem in model validation. With this momentum, we are more engaged in deployment to make our ML application perform better and more reliably, while exploring new research ideas.

Through this process, we learned that getting more involved in ML product development provides many benefits to researchers. First, it makes tech transfer much easier because contributing directly to deployed products involves less friction. This also means that we can test our new research ideas easily and measure their effect quickly. In addition, it helps us fix problems efficiently because we have a better understanding of how the models are served in deployment. ML products benefit from an evolutionary approach because there is no guarantee that the data or the surrounding infrastructure stays the same. Finally, this process naturally brings automation and scalability, which makes our work easily applicable and more impactful.

Next steps

We are currently working on a new version of our ML application to accommodate a CPU project that launched recently. We expect that the new version can be used in the early stage of verification, which is new territory for us. At the same time, we are exploring various research areas, including explainable AI for feature assessment and counterfactuals, unsupervised learning techniques to target novel bugs more effectively, methods to design ML-friendly test benches, and other verification-related problems such as assessing coverage. We are also putting effort into standardizing our workflow and automating existing features. We anticipate that our work will bring more consistent and reliable model performance over time. We also expect that it will serve as an example of successful tech transfer for ML deployment that can be applied to other engineering problems inside Arm.

Learn more about our research in our paper presented at DAC (Design Automation Conference) 2020, and please do reach out to me if you have any questions!